In the spring of 2017, a small team of marketing analysts in Midtown Manhattan was puzzling over a seemingly simple problem. Their client, a fast-growing consumer lender, had aggressive growth targets and wanted to hit them by doing more direct mail.

A lot more direct mail.

Since direct mail was performing exceptionally well for them, this strategy seemed to make sense, at least on the surface. Direct mail was also regarded as the preferred channel for lending prospecting, since other “unsecure” media like display, social and email presented privacy risks.

But there was a problem: the prospects who qualified for these loans represented a relatively small and fixed population. That left the marketing team with two stark choices, neither of which was particularly attractive:

1. They could get more prospects. The only way to do this would be to look outside of the current pool of qualified borrowers – people with higher risk profiles. Unsurprisingly, this option was not even on the table for the client.

2. They could send more direct mail to the existing pool of qualified prospects. In other words, a qualified prospect would receive six, eight or even ten pieces of direct mail over the course of the year, rather than the current three or four. The downside of a high-frequency approach like this is that it can wreak havoc on performance; more direct mail typically delivers a small percentage of additional conversions, significantly driving up the cost per conversion. In other words, the extra juice would not be worth the squeeze.
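The arithmetic behind that second option can be sketched in a few lines. Every number below (the cost per piece, the audience size, the conversion counts) is a made-up assumption for illustration, not a figure from the client's program; the point is only to show how a small number of extra conversions inflates the cost of each one.

```python
# Hypothetical numbers for illustration only; none come from the article.
COST_PER_PIECE = 0.50  # assumed cost to print and mail one piece, in dollars


def cost_per_conversion(pieces_mailed: int, conversions: int) -> float:
    """Total mailing cost divided by the conversions it produced."""
    return pieces_mailed * COST_PER_PIECE / conversions


# Assumed baseline: 100,000 prospects mailed 4 times each, 2,000 conversions.
baseline = cost_per_conversion(400_000, 2_000)

# Assumed high-frequency plan: 4 more pieces per prospect, only 400 more conversions.
# Marginal cost looks at the extra pieces and the extra conversions alone.
marginal = cost_per_conversion(400_000, 400)

# Blended cost across all 8 pieces per prospect and all 2,400 conversions.
blended = cost_per_conversion(800_000, 2_400)

print(f"baseline: ${baseline:.2f} per conversion")
print(f"marginal: ${marginal:.2f} per conversion")
print(f"blended:  ${blended:.2f} per conversion")
```

Under these assumed inputs, each conversion won by the extra mail costs five times as much as a baseline conversion, which is the squeeze the team wanted to avoid.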

They were stuck. What to do?

The analytics team had been kicking around a handful of hypotheses surrounding this dilemma. One of these ideas seemed promising. It was a long shot, but if it could be tested and validated, it might resolve everything.

Here was the idea: within the small, fixed population of qualified lending prospects, could there be sub-segments that respond favorably to more frequent direct mail touches? If these sub-segments existed, they could potentially be profiled and targeted, and the number of touches could be optimized accordingly. In other words, more direct mail would be sent to the audiences where it would have the most impact, while the rest would receive current levels.

To investigate, the analytics team chose to back-test direct mail (DM) performance over a period of 10 drop cycles, looking closely at how conversion rates changed as the cumulative number of direct mail pieces sent to the audience increased. As complex as this may sound, the analysis was actually a relatively straightforward exercise, using existing performance data and off-the-shelf analytic tools. As they started pulling the reports together, fingers were crossed that promising segments would emerge.

What they saw exceeded their expectations by a long shot. The data showed that, among the most desirable prospects in the population (highest deciles), more direct mail pieces correlated with significantly higher conversion rates. In other words, qualified prospects who were mailed 10 times were significantly more likely to convert than prospects who were mailed only 5 times.
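A back-test of this kind can be sketched roughly as follows. This is a minimal illustration, not the team's actual method: the record layout, the function names `conversion_rates` and `lift_by_decile`, and the sample data are all assumptions, and decile 10 is assumed to be the most desirable group.

```python
from collections import defaultdict


def conversion_rates(records):
    """Return {(decile, touches): conversion rate} from per-prospect records.

    Each record is a (decile, touches, converted) tuple: the prospect's score
    decile, the cumulative number of pieces mailed, and a True/False outcome.
    """
    mailed = defaultdict(int)
    converted = defaultdict(int)
    for decile, touches, did_convert in records:
        mailed[(decile, touches)] += 1
        converted[(decile, touches)] += int(did_convert)
    return {key: converted[key] / mailed[key] for key in mailed}


def lift_by_decile(rates, low_touches, high_touches):
    """Ratio of the conversion rate at high vs. low touch counts, per decile."""
    lifts = {}
    for (decile, touches), rate in rates.items():
        if touches != low_touches:
            continue
        high = rates.get((decile, high_touches))
        if high is not None and rate > 0:
            lifts[decile] = high / rate
    return lifts


# Made-up sample: decile 10 responds to extra mail, decile 1 does not.
sample = (
    [(10, 5, True)] * 2 + [(10, 5, False)] * 98
    + [(10, 10, True)] * 5 + [(10, 10, False)] * 95
    + [(1, 5, True)] * 1 + [(1, 5, False)] * 99
    + [(1, 10, True)] * 1 + [(1, 10, False)] * 99
)

rates = conversion_rates(sample)
lifts = lift_by_decile(rates, low_touches=5, high_touches=10)
for decile in sorted(lifts, reverse=True):
    print(f"decile {decile}: lift at 10 vs. 5 touches = {lifts[decile]:.2f}x")
```

A lift well above 1.0 in one decile and close to 1.0 in another is the pattern that would point to a sub-segment worth mailing more often. A back-test like this shows correlation only; a holdout test would be needed to confirm that the extra mail itself drives the lift.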

Using nothing more than some fairly simple analysis, this small team had discovered that they had barely scratched the surface of DM performance. More direct mail could help drive tens of millions of dollars in new loans without hurting cost-efficiency.

A few hours of work. Tens of millions of dollars in additional sales.

This story underscores the importance of analytics, testing and learning. It also highlights a key distinction between a skillset and a mindset:

- Analytic Skillset: the competency of conducting analysis, designing and implementing tests, using sound measurement and analytic methodologies, and applying statistical best practices to ensure that findings are valid.

- Analytic Mindset: the attitudes about analytics, testing and learning, which drive business questions and hypotheses. Mindset also drives decisions and prioritizations on what is to be tested, how the results will be used, and the resources that should be allocated to it.

In financial services, most firms have a high level of analytic skill. They have statisticians and strategists at their disposal, marketing teams who are analytically oriented, and access to vast amounts of customer and prospect data. Even if these things are not directly under the firm’s roof, “skill” can be purchased. It’s a commodity.

What isn’t a commodity (and is actually quite rare) is a high-level analytic mindset. The example above showed how a small team with a few basic tools uncovered extremely valuable insights. They did this by framing the problem around a business question, and by interrogating that question with creative hypotheses.

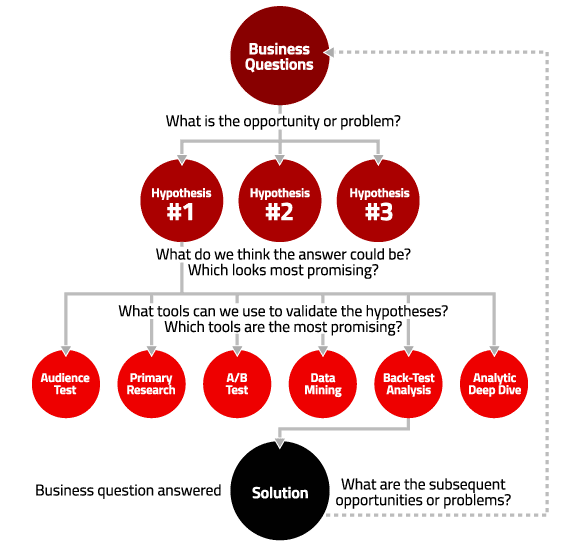

Here is a simple framework that helps to illustrate this kind of high-level analytic mindset. It works from the top down, starting with the business question:

Many financial services firms look at testing, learning and analytic opportunities tactically, from the bottom up. They think about what they could test or analyze given their skillset. For example:

- Which works better: one direct mail or two direct mails? (audience test)

- What products are millennials buying from us? (data mining)

- Which works better on the landing page: a picture of a bank branch, a family, or a child with his dog? (A/B/C testing)

- Which offer works better: $100 bonus or $150 bonus? How much better? (A/B testing)

- What do people want from us? (primary research)

To be clear, there is nothing wrong with these examples per se. In fact, learnings from these questions can deliver significant and immediate impact.

The flaw is the way in which these tests were devised. These kinds of tests are typically brainstormed through a skillset lens (“What’s testable?” or “What haven’t we done yet?”), and that is backwards. Bottom-up thinking can mean missing the really big, important insights that answer “What opportunities exist?”, the kind of discoveries that can shift the business 10x.

This is my call to action to you: Think about your organization’s analytic, testing and learning mindset. Are you digging deep into the business problem and spending time on hypotheses? Are you considering big, bold arguments that could be proven or disproven? These questions will help lead you to the right methodologies and skillsets that can simply, yet dramatically, boost your own business results.