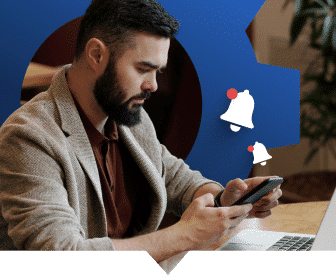

Apple had been basking in worldwide admiration of its new payment card, devised in partnership with Goldman Sachs and rolled out in August 2019. That came to a screeching halt on November 7, 2019, when David Heinemeier Hansson, the tech entrepreneur who created Ruby on Rails and founded Basecamp (a popular project management package), tweeted this message from his @DHH account:

“The @AppleCard is such a [bleeping] sexist program. My wife and I filed joint tax returns, live in a community-property state, and have been married for a long time. Yet Apple’s black box algorithm thinks I deserve 20x the credit limit she does. No appeal works.”

The tweet alleging that the algorithm behind the Apple Card’s credit evaluation process discriminates against women quickly went viral.

The controversy raises a number of red flags. Your data analytics program can land you in hot water. Financial institutions need to take a fresh look at their algorithms and artificial intelligence. And in a connected digital world, providers of financial services can find regulatory trouble on their doorstep overnight, quite apart from any official process such as examinations.

The situation also underscores the volatile power of social media when mobs take brands to task, and how communication through this channel eclipses traditional media in financial marcomm strategies.

That’s a lot of impact stemming from just one little tweet.

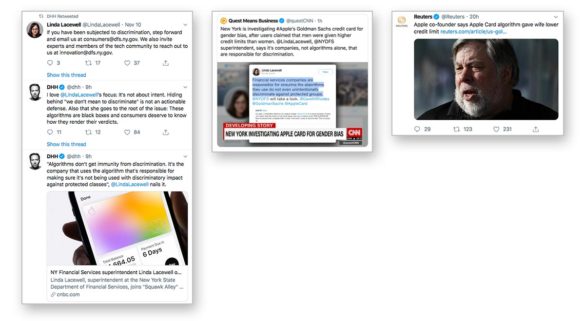

Tweets from David Heinemeier Hansson, accusing Apple Card of gender discrimination, started a tweetstorm, including ripples like the CNBC tweets promoting Hansson’s interview with the channel.


Hansson’s tweetstorm prompted more stories from people with similar experiences, and soon thereafter Twitter was rife with multiple threads echoing the original accusation.

One of the more notable tweets following up on Hansson’s complaint came from Steve Wozniak, the co-founder of Apple. “The same thing happened to us,” Wozniak said about the card he got from the company he helped create. “I got 10x the credit limit. We have no separate bank or credit card accounts or any separate assets. Hard to get to a human for a correction though. It’s big tech in 2019.”

Wozniak subsequently went on television to speak about the matter.



Tweets Prompt Regulator to Launch Official Investigation

Even before Wozniak had weighed in, a very significant set of eyes hit the stream — those of Linda Lacewell, New York State’s Superintendent of Financial Services, the top banking regulator there.

After seeing all the tweets up to that point, Lacewell commented on Twitter and in a post published under her department’s auspices on Medium, a blogging platform. She wrote (over a weekend, mind you) that, based on what she’d seen, her department would investigate whether the algorithm violated New York laws that prohibit credit discrimination on the basis of sex.

She noted in the blog that state anti-discrimination protections mean that “an algorithm, as with any other method of determining creditworthiness, cannot result in disparate treatment for individuals based on age, creed, race, color, sex, sexual orientation, national origin, or other protected characteristics.” She pointed out that only the previous week her department had opened an investigation of UnitedHealth Group concerning an algorithm that allegedly resulted in Black patients receiving inferior care compared with white patients.

In New York, she added in the blog, “we support innovation. … However, new technologies cannot leave certain consumers behind or entrench discrimination.”

Tweets from Linda Lacewell, New York State’s Superintendent of Financial Services, including some about her own TV interviews on the controversy, heightened the tension. So did comments by Apple co-founder Steve Wozniak.


“We will work to investigate what may have gone wrong,” wrote Lacewell, “and if the algorithm used by Apple Card did indeed promote unlawful discrimination we will take appropriate action. But this is not just about looking into one algorithm. DFS wants to work with the tech community to make sure consumers nationwide can have confidence that the algorithms that increasingly impact their ability to access financial services do not discriminate.”

Hansson applauded the regulator’s comments.

To Davia Temin, President and CEO of Temin and Company Inc., a crisis communications firm, the fast-moving situation underscored the risks of today’s communications and branding landscape.

“In the social media world, a vehement and powerful communicator, such as Hansson, can put any issue on the map. And bold-faced names, such as Steve Wozniak, can bump that issue up to viral,” said Temin. “Truth, often, ceases to matter, only perception does. But in this case, there are underlying worries that are quite legitimate, and those are what Apple and Goldman might have prepared for more.”

“Proactive risk assessment is no longer nice to have, it is mission-critical,” said Temin. Companies and their boards need to identify both predictable and “black swan” risks, and then practice how to deal — authentically — with them both before, during and after they hit.

So, where were Apple and Goldman Sachs while all this was happening?

Tweetstorm Migrates to Television

Regulators weren’t the only ones scanning the Twitterverse. The controversy soon spilled onto cable television and web channels.

CNBC interviewed Lacewell, who said one of the big problems with current innovation using algorithms is that “it’s a black box for consumers, it’s a black box for regulators. Consumers are entitled to know how these decisions are being made that affect their daily lives.”


She made the point that intent didn’t matter: if a mechanism or procedure has “disparate impact” on a protected group of consumers, it must be addressed. “Disparate impact” is a line of reasoning used in fair-lending lawsuits. It holds that even where there is no obvious discriminatory treatment, a practice that affects a protected group differently from other consumers produces discriminatory effects and can violate fair-lending and housing laws.
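One common way to put a number on that idea is to compare how often each group receives a favorable outcome. The sketch below is illustrative only and is not a description of how Goldman Sachs, Apple, or any regulator tests for bias. The function names, the group labels, and the sample data are all hypothetical, and what counts as a "favorable" outcome (for example, a credit limit above some threshold) is an assumption the analyst would have to define.

```python
from collections import defaultdict


def favorable_rates(decisions):
    """Return the share of favorable outcomes for each group.

    `decisions` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the applicant received the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, got_favorable in decisions:
        totals[group] += 1
        if got_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratio(decisions, protected, reference):
    """Ratio of the protected group's favorable rate to the reference group's."""
    rates = favorable_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


# Hypothetical data, invented purely for illustration.
sample = (
    [("women", True)] * 30 + [("women", False)] * 70
    + [("men", True)] * 60 + [("men", False)] * 40
)

ratio = impact_ratio(sample, protected="women", reference="men")
print(f"impact ratio: {ratio:.2f}")
```

In this made-up sample, women receive the favorable outcome half as often as men, so the ratio is 0.50. A ratio well below 1.0 does not prove unlawful discrimination by itself, but it is the kind of outcome-based signal, independent of intent, that would prompt a closer look at why the gap exists.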

Lacewell noted that in the tweetstream Hansson and others said that Apple Card consumer representatives had explained the differing results between spouses by blaming the algorithm.

“There’s no such person called ‘The Algorithm’,” Lacewell insisted. “The company needs to get transparent.”

On CNN International, Lacewell said she had been somewhat surprised about the anecdotal complaints brought out as people commented on Hansson’s ongoing tweets.

“Apple and Goldman are at the heads of their industries and it’s incumbent upon them to show some leadership,” said Lacewell. “I realize that this is an emerging area, but it’s incumbent now upon those companies to set some best practices and show others in the industry what they can follow.”

Hansson, appearing on CNBC after the regulator, said he agreed with Lacewell that intent didn’t matter.

“What matters is outcome,” said Hansson. “And we had sexist outcomes in our case. … And the company behind the algorithm is responsible for the outcomes of the algorithm.” He said the consumer has no chance to see the inputs that go into the algorithm nor to correct any mistakes.


In the interview, Hansson continued themes he had posted on Twitter:

“Goldman Sachs is essentially asking us just to trust them. The financial industry and Goldman Sachs in particular has not earned that trust at all,” Hansson told CNBC. “They have to show proof that they are unbiased, that they are doing a fair and impartial evaluation of credit that applies equally to everyone as protected members. … Apple is simply being cowardly by handing this over to Goldman Sachs. It is the Apple Card. It’s not the Goldman Sachs card. No one cares that Goldman Sachs is actually the credit institution behind this. This is the Apple Card.”

Hansson and others in the tweetstream made the point that the Apple Card has been promoted as “designed by Apple, not a bank.” Yet Apple had been silent on the whole matter, earning more brickbats on Twitter.


Goldman Sachs Responds to Allegations of Bias

For its part, Goldman responded twice on the matter through @gsbanksupport, the customer service handle for its Salt Lake City bank that partners with Apple on the card.

On Nov. 10, it responded more specifically, in a Twitter message signed by Andrew Williams, chief spokesperson. It reviewed factors that go into credit decisions, and said, “it is possible for two family members to receive significantly different credit decisions. In all cases, we have not and will not make decisions based on factors like gender.”

A day later, the company reiterated the latter point and added that, “we do not know your gender or marital status during the Apple Card application process.”

“Together with a third party, we reviewed our credit decisioning process to guard against unintended biases and outcomes,” stated Carey Halio, CEO at Goldman Sachs Bank USA, in the tweet.

Wozniak, meanwhile, appeared later the same day on a Bloomberg webcast and spoke more positively about the Apple Card. (He noted he is nominally and minimally still on the Apple payroll.) He explained that he’d never meant his tweets to be more than a side comment on Hansson’s remarks.

“You know, there were a lot of articles saying, ‘Oh, I was alleging gender bias as the only possible explanation.’ My wife thought that at first — I didn’t. I never used those words and would never say that, because I know that Apple itself is the least biased of all companies.”

Wozniak gave Goldman Sachs credit for responding by phone to his tweets, offering to review his wife’s situation specifically and stating more generally that it would improve processes, including making it easier to reach a human being to question credit and other decisions.

Controversy Outside of Usual Communication Channels

Interestingly, much of the conversation in this matter took place outside of “normal” communication paths. As of the posting of this article, the main Goldman Twitter account had never mentioned the matter. The New York State Department of Financial Services’ website makes no mention of anything that Lacewell said on Twitter, TV, or Medium. The programs that aired the interviews recounted above put out their own tweets to publicize the stories.

Davia Temin addressed multiple aspects of this communications challenge.

A key matter is Apple’s long silence — in social media time.

“The biggest difficulty is that Apple’s marketing message has been: ‘Apple Card completely rethinks everything about the credit card. It represents all the things Apple stands for. Like simplicity, transparency, and privacy’,” says Temin. “And Hansson, who first brought this entire issue up to his 358,000 followers on Twitter, quotes [Apple’s] marketing on Twitter, saying: ‘I couldn’t make this up! This is literally the top pitch on the @AppleCard marketing page’.”

Even Goldman Sachs’ response via Twitter wasn’t what it could have been, she said.

“When they finally came out with a partial explanation of the criteria used by the card to develop credit limits, it helped,” said Temin. “But it was too little, too late.”

Temin says this is a case where speaking with one voice from the start would have made more sense for the Apple Card partners.

A communications risk assessment back during product development would have helped, she suggests.

“Had the parties really thought through potential pushback to their card, they might have thought through speaking with one voice, rather than setting themselves off, one against the other,” she said. “And they would not have made marketing promises that they then must appear to walk back. There is one public, in a crisis … and organizations are held to account for what they have promised in their marketing as well as in their fine print. Dissonance between messages is what causes regulators to pay attention.”

Temin thinks something more proactive would have made a better message on social for the Apple Card. She said it could have been something like this:

“We have worked hard to wring out all bias from our legitimate decision making process … but we are seeing that may not be enough. While of course our processes are competitive and confidential, we are beginning immediately a process to unpack our algorithms to assure that there is no gender or any other kinds of bias baked into them. Obviously this is difficult, but that does not mean we will not try. And we will. We may not be able to reveal everything, but we will seek to be more transparent in this process, and look more deeply into our algorithms.”

Will this blow over, letting the Apple Card regain the luster it had before?

Maybe, but likely not, said Temin.

“Gender issues and bias issues are sticky these days, and they’re not blowing over as easily as other issues,” she explained. “To avoid any taint in the reputation of the card, I would suggest some proactivity, not just bobbing and weaving. These days, true crisis management often means fixing something, not explaining it away.”


Do Americans Trust Algorithms?

“Technology is moving at the speed of light across our industries,” said Lacewell in one of her interviews. “It’s important to take a breath and look at how these new technologies are affecting customers.”

She suggested that “a lot of historic biases are at work, and when factors are kind of thrown into the mix, this can happen. So the important thing is that we get ahead of it.”

As Lacewell’s comments and others in the tweetstream underscore, what’s ultimately being questioned is the algorithms themselves. “I don’t think that there’s someone at Goldman Sachs or at Apple, that sits down nefariously wanting to discriminate against women,” said Hansson in the CNBC interview. “But it happens all the time nonetheless.”

Temin thinks bankers and credit union executives can count on more and more such reaction as “the robots” move in. Experts in fair-lending law have been warning the industry for years about inadvertent bias and evolving bias — discrimination that develops as artificial intelligence “learns,” if it goes unchecked.

“The worry that bias is being built so deeply into algorithms that it will never get out is legitimate, and incendiary. It fuels the mistrust of AI and more,” said Temin. “Yet, I work with two AI startups, and they tell me that algorithms can be unpacked, and we can add some degree of transparency. I predict pressure will build for that to happen. And, again, the best way to manage crises is to fix their underlying issues, or at least authentically seek to.”

One of the frequent commenters on Hansson’s Apple Card tweetstream is Cathy O’Neil, @mathbabedotorg on Twitter. O’Neil published Weapons of Math Destruction, an attack on big data in general, in 2016. She is now part of a consulting firm that offers to certify algorithms as being accurate, fair and free of bias. On her website, O’Neil makes the point that, in spite of efforts to portray algorithms as neutral programs, an algorithm is “an opinion embedded in math.” She disputes those who say algorithms make things more objective.

The distrust in algorithms has ushered in legislation introduced in April 2019 by Democrats in both the House and Senate, the “Algorithmic Accountability Act.” The legislation would require companies to regularly evaluate their data tools to be sure they are accurate, fair and free of bias and discriminatory results.

O’Neil, through a multi-stage tweet, made the following suggestions to Apple, adapted below:

- Don’t deny this algorithm is a problem, and don’t pretend it isn’t your problem.

- Figure out what people actually want to know. The answer isn’t your valuable source code.

- The real problem is trust — trust that the algorithm isn’t unfair.

- Have evidence that you’ve done tests (describe them!) to validate that the algorithm is fair (define what you mean by that!).

- Have a third party audit the tests.

- Put in an appeal system in case people feel unfairly treated in spite of all your tests and evidence.

- Set an open source standard for others (i.e. other companies that extend credit) to follow that not only follows the law but goes beyond those requirements.

Wozniak, in the Bloomberg video, was actually against digging into the algorithm much.

“I don’t believe looking into the algorithm is the solution. I don’t believe that changing the algorithm is the solution. I think it’s somehow being able to get individual attention in cases where the algorithm misses,” said Wozniak.

He added: “I decided a long time ago that having a good product isn’t even as valuable as having good support for what you’ve got.”